How do we know we can trust the images, video, audio or text we see online? The use of AI to create media is making it harder for people to distinguish genuine content from inauthentic content. Misinformation and disinformation are a risk to society’s trust in news.

This article discusses how BBC Research & Development and the Coalition for Content Provenance and Authenticity (C2PA) have been researching, designing and contributing to a technical standard to protect the integrity of digital information. Through our research we want to empower people to make an informed judgement on content by surfacing media provenance information in a clear, understandable and accessible way.

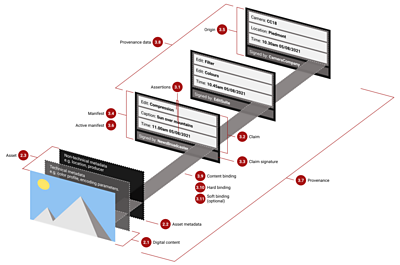

How it works

When content is created, captured or edited using a C2PA-supported device or application, additional information known as provenance (now known across the industry as Content Credentials) can be cryptographically attached, starting an edit history for that content. This information is logged through both hardware and software in a way that is objective and tamper-evident. It may include the time, date and location, how the piece of content was modified, and whether AI was involved. Once the content is made available, anyone, including you, can view the provenance information about it.
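To make "cryptographically attached" and "tamper-evident" more concrete, here is a minimal Python sketch. It is a simplification rather than the C2PA format: real Content Credentials use certificate-based signatures and a structured manifest, whereas this sketch uses a shared-secret HMAC from the standard library. The names `attach_provenance`, `verify_provenance` and `DEMO_KEY`, and the fields in the example, are hypothetical and chosen only for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. Real Content Credentials are signed with a
# certificate-backed private key, not a shared secret like this one.
DEMO_KEY = b"demo-signing-key"


def attach_provenance(content: bytes, assertions: dict, key: bytes = DEMO_KEY) -> dict:
    """Bind provenance assertions to the content and sign the result."""
    record = {
        # The hash ties the record to these exact bytes.
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "assertions": assertions,
    }
    # Serialise deterministically so the same record always signs the same way.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"record": record, "signature": signature}


def verify_provenance(content: bytes, manifest: dict, key: bytes = DEMO_KEY) -> bool:
    """Return True only if neither the content nor the record has changed."""
    payload = json.dumps(manifest["record"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    content_ok = (
        manifest["record"]["content_sha256"] == hashlib.sha256(content).hexdigest()
    )
    return signature_ok and content_ok


image = b"raw image bytes"
manifest = attach_provenance(
    image, {"captured": "2024-03-01T10:15:00Z", "edits": ["crop"], "ai_used": False}
)
print(verify_provenance(image, manifest))            # True
print(verify_provenance(image + b"edit", manifest))  # False: content was altered
```

Changing either the content or any recorded assertion after signing makes verification fail, which is the sense in which the information is tamper-evident rather than tamper-proof.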

The research

Our research in this area began in 2021 and has helped us establish a greater understanding of:

- what image provenance information is important to display

- how people want to see that provenance information

- and the impact on trust in content when provenance is shown.

What information is important and relevant

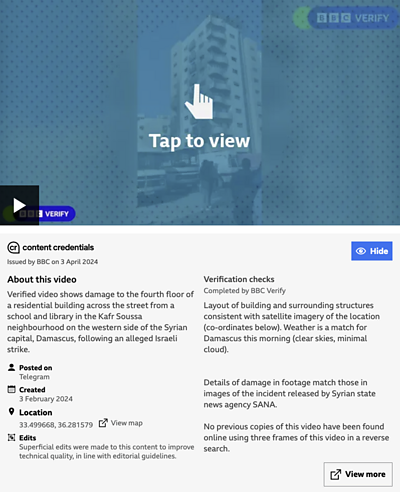

Research told us that people are interested in seeing key information when evaluating images: description, time, date, location, whether the image has been verified and which verification checks were conducted, who published the image, who owns it, the creator/photographer, any image edits, and whether AI was used.

We also noticed that the language used when surfacing this information was important; some words carry certain associations, or can be perceived negatively or positively. Three areas needed more exploration around the use of language: edits, verification and the use of AI.

- Edits: People primarily wanted to know if an image had or had not been edited. Some wanted to know more about the kind of edits, or be told if significant edits had been done. Significant edits were defined as anything that has the potential to change the meaning of the image.

- Verification: People primarily wanted to know if the image had been verified or not. They were also interested in the kind of checks that were done to verify it. They wanted these checks to be conducted by an independent body, and they wanted the ability to learn more about the verification process.

- Generative AI: People primarily wanted to know if generative AI was used. Their level of digital literacy, and the language used to describe AI, were key factors in how they perceived this. Use of generative AI was not viewed as acceptable in a news context, other than when reporting on the use of AI. They also wanted this information to be flagged clearly to them.

How to show provenance information

We have been looking at how information can be presented so that people find it usable, helpful and engaging. C2PA recommend four levels of progressive disclosure, each with more detail:

- the first indicating the information is available

- the second with a summary of the key information users want

- and additional levels with considerably more detail, including some technical descriptions.

A key challenge with disclosing provenance information is that there is a large amount of data, which would be overwhelming and time-consuming to read. People also have diverse needs; some are less interested in the details or unfamiliar with content production processes, while others are keen to interrogate the content for themselves. So we have been exploring how to show this information in a way that is objective but concise, while meeting these varied user needs. Our research has focused primarily on the first and second levels, where key information is summarised.
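The idea of progressive disclosure can be sketched as a function that returns more of the same provenance record at each level. This is only an illustration of the principle: the field names, the split between summary and technical fields, and the `disclose` function are assumptions made for this example and are not taken from the C2PA guidance or from our implementation.

```python
# Hypothetical field groupings, chosen for illustration only.
SUMMARY_FIELDS = ("publisher", "creator", "captured", "edited", "ai_used")
TECHNICAL_FIELDS = ("signature_algorithm", "certificate_issuer")


def disclose(provenance: dict, level: int) -> dict:
    """Return the part of a provenance record shown at a disclosure level."""
    if level not in (1, 2, 3, 4):
        raise ValueError("level must be 1, 2, 3 or 4")
    if level == 1:
        # Level 1 only signals that provenance information exists.
        return {"credentials_available": bool(provenance)}
    if level == 2:
        # Level 2 is a short summary of the fields people ask for most.
        return {k: provenance[k] for k in SUMMARY_FIELDS if k in provenance}
    if level == 3:
        # Level 3 shows everything except the technical details.
        return {k: v for k, v in provenance.items() if k not in TECHNICAL_FIELDS}
    # Level 4 shows the full record, including technical details.
    return dict(provenance)


example = {
    "publisher": "Example News",
    "creator": "A. Photographer",
    "edited": True,
    "ai_used": False,
    "edit_history": ["crop", "colour correction"],
    "signature_algorithm": "ES256",
}
for level in (1, 2, 3, 4):
    print(level, disclose(example, level))
```

Keeping every level as a view of one underlying record means the summary can never say something the detailed view does not support.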

We also explored potential layouts for presenting image provenance information to users. People wanted the information to be displayed consistently across devices and platforms, and to be able to scan it easily to find key points of interest. However, they acknowledged that this may not be possible, given how differently organisations may implement the technology on their platforms. From the research, we were able to pull out key visual principles that matter to people when they see provenance information for images.

It's important to consider how presentation can affect people’s perceptions, and to ensure that it does not influence them but allows them to make their own decision. For example, people may want this system to flag content that may be misleading, using a traffic light system; however, we know this can lead to misunderstanding and misrepresentation of the facts.

In our first trial, where we began using Content Credentials on selected BBC Verify stories, we also had to consider technical restrictions around what is possible to show to people, such as available provenance information and web/app platform constraints. Our goal was to ensure the overall article experience was not negatively affected, while making the provenance information clearly discoverable to people. We explored a number of possibilities within the constraints we had, and through testing these solutions we identified the drop-down approach as the most effective and desired format.

How provenance information impacts trust

Our quantitative research found that surfacing the information in this way had a primarily neutral impact on trust, with some indication of increased trust in certain instances. This is true both of people who use the BBC and of those who do not. Adding provenance information was also shown to increase trust in content published on BBC News among non-BBC users.

We were encouraged by a self-selecting, limited audience trial of 1,200 people who answered three multiple choice questions. Their answers suggested that:

- 83% trust the media more after seeing our Content Credentials

- 96% found our Content Credentials useful

- 96% found our Content Credentials informative

We conducted our tests with three different types of image: editorial, stock image, and user-generated content (UGC). Adding provenance information served as an equaliser of trust across the image types, with all of them eliciting the same levels of trust. Where no provenance information was shown, UGC had the highest levels of trust, followed by stock images and then editorial images.

Achievements and further work

Drawing on these learnings, we have built a lightweight way for journalists to sign and share provenance information for key imagery we publish, giving audiences an insight into not just what we know, but how we know it.

More live trials like this will help us gain a more representative understanding of whether this helps audiences increase their trust in news, something that audiences and the news industry are both striving for and will benefit from.