More than a decade ago, BBC Research & Development’s IP Studio project helped shape the direction of the broadcast technology industry by demonstrating our vision of live production using IP networks. Our early work at IBC 2013 and a major showcase at the 2014 Commonwealth Games showed how broadcasters could benefit from moving away from specialist cabling such as SDI to generic network infrastructure. This work helped drive the development of specifications such as SMPTE ST 2110 and NMOS, which are now at the core of broadcast facilities.

Since then, the compute and network performance offered by public cloud providers has increased hugely, and they provide numerous services to support software developers. Some of this already benefits broadcasters, especially for post-production, distribution, and playout operations, but we want live production to benefit too.

But the huge amount of uncompressed bandwidth used in a typical multi-camera live operation is a challenge for using the public cloud cost-effectively. As a result, we’ve been investigating what is possible by running live software applications on our on-premise OpenStack cloud.
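To put the bandwidth challenge in numbers, here is a back-of-the-envelope sketch of the active-picture bit rate of uncompressed video. It assumes 10-bit 4:2:2 sampling at 50 frames per second and a hypothetical twelve-camera production; it ignores blanking, audio and packetisation overheads, so real streams would be somewhat larger.

```python
from fractions import Fraction

# Average samples per pixel for common chroma subsampling schemes:
# one luma sample plus the (possibly subsampled) Cb and Cr samples.
SAMPLES_PER_PIXEL = {
    "4:4:4": Fraction(3),
    "4:2:2": Fraction(2),
    "4:2:0": Fraction(3, 2),
}


def active_video_bitrate(width: int, height: int, frame_rate: Fraction,
                         bit_depth: int, sampling: str = "4:2:2") -> Fraction:
    """Bits per second for the active picture only (no blanking, audio or packet headers)."""
    return width * height * SAMPLES_PER_PIXEL[sampling] * bit_depth * frame_rate


hd = active_video_bitrate(1920, 1080, Fraction(50), 10)
uhd = active_video_bitrate(3840, 2160, Fraction(50), 10)
cameras = 12  # hypothetical number of sources in a multi-camera production

print(f"1080p50 10-bit 4:2:2: {float(hd) / 1e9:.2f} Gbit/s")
print(f"2160p50 10-bit 4:2:2: {float(uhd) / 1e9:.2f} Gbit/s")
print(f"{cameras} x 1080p50: {float(hd * cameras) / 1e9:.2f} Gbit/s")
```

This prints about 2.07, 8.29 and 24.88 Gbit/s respectively. Using `Fraction` keeps non-integer rates such as 59.94 Hz exact when passed as `Fraction(60000, 1001)`.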

We’re not the only broadcaster interested in this, so we are working with the EBU on an initiative called the Dynamic Media Facility (DMF). This encourages the media industry to focus on building interoperable software media functions that can be provisioned on demand in containers. These ways of working, such as using Kubernetes orchestration, will be familiar to modern cloud developers.

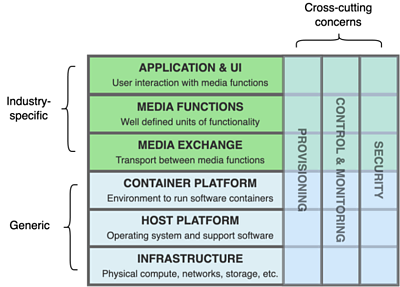

I co-chair the EBU group that is tasked with capturing requirements from its members on the benefits they expect from a move to all-software working. These include the ability to share resources across multiple productions, better resilience for operations, and (by taking advantage of high-speed WAN connectivity) more flexible use of broadcasters’ physical locations. We have also proposed a reference architecture for the industry, inspired by similar approaches used for cloud-based infrastructure and applications.

The reference architecture helped hugely with discussions between broadcasters and vendors at IBC 2024, and it soon became clear that there was an appetite to start working collaboratively, with Media Exchange identified as a particular priority. This is because there is currently no agreed ‘software-friendly’, low-latency way of sharing high-rate video and audio between applications. This may seem surprising, but standardised streaming protocols either introduce unwanted compression or, in the case of SMPTE ST 2110, were designed primarily as a networked replacement for SDI and so carry some legacy overheads and complications.

It would be better to use shared-memory protocols such as RDMA (Remote Direct Memory Access), which was initially developed for high-performance computing but is now supported on a wide range of platforms. Several broadcast vendors already have ‘frameworks’ that use shared memory, but of course we can’t recommend something that is controlled by a single company. So, over the last few months, the EBU has worked with industry partners to launch the Media eXchange Layer (MXL). The idea is to develop an open-source SDK first, rather than taking the more traditional approach of agreeing a specification first. Based on our observations of other projects, we think this will be more effective!
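To make the shared-memory idea concrete, here is a minimal single-host sketch using Python's `multiprocessing.shared_memory` module. A writer places fixed-size frames into a ring of slots in one named segment and publishes a frame count; a reader attaches by name and fetches frames by index, with no network stack in between. This is not the MXL SDK or its API: the class names, header layout and ring design are hypothetical, it does not use RDMA, and a production implementation would need proper cross-process synchronisation and would typically hand out views rather than copies.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical header at the start of the segment: frames committed so far (uint64).
HEADER = struct.Struct("<Q")


class FrameRingWriter:
    """Hypothetical writer: fixed-size frame slots in one shared-memory segment."""

    def __init__(self, frame_size: int, slots: int):
        self.frame_size, self.slots, self.count = frame_size, slots, 0
        self.shm = shared_memory.SharedMemory(
            create=True, size=HEADER.size + frame_size * slots)
        HEADER.pack_into(self.shm.buf, 0, 0)
        self.name = self.shm.name  # readers attach using this name

    def write(self, frame: bytes) -> int:
        if len(frame) != self.frame_size:
            raise ValueError("frame does not match the slot size")
        index = self.count
        offset = HEADER.size + (index % self.slots) * self.frame_size
        self.shm.buf[offset:offset + self.frame_size] = frame
        # Publish the new count only after the payload has been copied in.
        self.count += 1
        HEADER.pack_into(self.shm.buf, 0, self.count)
        return index

    def close(self) -> None:
        self.shm.close()
        self.shm.unlink()  # the writer owns the segment's lifetime


class FrameRingReader:
    """Hypothetical reader: attaches to an existing segment by name."""

    def __init__(self, name: str, frame_size: int, slots: int):
        self.frame_size, self.slots = frame_size, slots
        self.shm = shared_memory.SharedMemory(name=name)

    def committed(self) -> int:
        return HEADER.unpack_from(self.shm.buf, 0)[0]

    def read(self, index: int) -> bytes:
        if index >= self.committed():
            raise LookupError("frame not written yet")
        offset = HEADER.size + (index % self.slots) * self.frame_size
        with self.shm.buf[offset:offset + self.frame_size] as view:
            data = bytes(view)  # copy out so the view can be released
        # If the writer has since reused this slot, the copy may be stale.
        if index < self.committed() - self.slots:
            raise LookupError("frame was overwritten")
        return data

    def close(self) -> None:
        self.shm.close()


writer = FrameRingWriter(frame_size=4, slots=3)
reader = FrameRingReader(writer.name, frame_size=4, slots=3)
try:
    for i in range(5):
        writer.write(bytes([i]) * 4)  # five toy "frames", only three slots
    print(reader.read(4))             # most recent frame
    try:
        reader.read(0)                # its slot has since been reused
    except LookupError as err:
        print("frame 0:", err)
finally:
    reader.close()
    writer.close()
```

Addressing frames by a monotonically increasing index, rather than by "the next frame on the wire", lets several readers consume the same flow at their own pace, as long as they stay within the ring's depth.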

BBC R&D's Peter Brightwell, Paola Sunna of the EBU, and Andy Rayner of Appear introduce this year's live media exchange challenge.

A lot has been happening in the last few months on the first version of MXL, and it will feature significantly at IBC 2025 alongside the Dynamic Media Facility. There will be demonstrations, panel discussions, and meet-ups on the EBU stand as well as an IBC Accelerator collaboration with vendors. MXL will also be present on several vendors’ stands, and there’ll be a conference paper too. See the EBU’s site for an up-to-date list!

Another Accelerator project at IBC 2025 demonstrates . R&D has helped with proof-of-concept trials at RTÉ in Dublin and is working with BBC colleagues and other broadcasters on how DMF and MXL can help take this further.

There’ll be more to come after IBC as the MXL team works towards the v1.0 release of the code, and the EBU DMF team expands on its requirements for control and orchestration and on how best to capture and preserve timing information through MXL. That timing work will be essential to make the most of our research on time-addressable media for live operations.
